Master Context Switching: Boost Efficiency in Multitasking Environments

In today's fast-paced digital world, mastering context switching is crucial for developers, system administrators, and anyone juggling multiple tasks. This skill allows you to minimize performance overhead while maximizing efficiency. Whether you're optimizing code or simply trying to manage your daily workflow better, understanding how systems switch between tasks will give you a competitive edge. Let's dive deep into the mechanics and strategies of effective context switching.

Step 1: Understanding the concept of context switching

Context switching is the process by which a CPU stops executing one task (a process or thread) and starts executing another. Imagine you're working on two projects simultaneously: your brain needs to 'save' where you were in one project before focusing on the other. Computers do the same thing, far faster. Common triggers include a timer interrupt signaling that a task's time slice has expired, a task blocking while it waits for I/O, and a higher-priority task becoming ready to run. However, frequent switching comes with a cost: each switch requires saving one task's state and loading another's, and that overhead can reduce overall system performance.
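To see that these switches really happen, Unix-like systems keep per-process context-switch counters. The minimal sketch below assumes a Linux machine and uses Python's standard `resource` module (Unix only): `getrusage` reports `ru_nvcsw` (voluntary switches, where the task blocked, for example while sleeping) and `ru_nivcsw` (involuntary switches, where the scheduler preempted it). The helper names are hypothetical, and the exact counts will vary from run to run.

```python
import resource  # Unix-only standard library module
import time

# Illustrative sketch: the helper names below are hypothetical.


def switch_counts() -> tuple[int, int]:
    """Return (voluntary, involuntary) context switches for this process so far."""
    usage = resource.getrusage(resource.RUSAGE_SELF)
    return usage.ru_nvcsw, usage.ru_nivcsw


def count_switches_while_sleeping(n_sleeps: int = 50) -> tuple[int, int]:
    """Sleep repeatedly and report how many switches the OS recorded meanwhile."""
    vol_before, invol_before = switch_counts()
    for _ in range(n_sleeps):
        # Sleeping blocks the thread, so the kernel runs something else:
        # this shows up as a *voluntary* context switch.
        time.sleep(0.001)
    vol_after, invol_after = switch_counts()
    return vol_after - vol_before, invol_after - invol_before


if __name__ == "__main__":
    voluntary, involuntary = count_switches_while_sleeping()
    print(f"voluntary: {voluntary}, involuntary: {involuntary}")
```

A CPU-bound loop that never sleeps would instead tend to raise the involuntary count on a busy machine, because the scheduler has to preempt it.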

Step 2: Understanding the basics of multitasking

Multitasking is what makes context switching necessary. It's the ability of an operating system to execute multiple tasks concurrently. There are two main types: cooperative multitasking (where tasks yield control voluntarily) and preemptive multitasking (where the OS controls task switching). Scheduling algorithms like Round Robin or Priority Scheduling determine which task gets CPU attention next. Understanding these helps you predict and optimize when context switches will occur in your system.
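Cooperative multitasking with a Round Robin scheduler can be modeled in a few lines using Python generators. This is a toy illustration, not how an OS scheduler is built: each `yield` is a task voluntarily giving up control, and the generator object keeps the task's local state between turns, much like a saved context. The names `worker` and `round_robin` are hypothetical.

```python
from collections import deque
from typing import Generator, Iterable

# Illustrative sketch: `worker` and `round_robin` are hypothetical names.
Task = Generator[None, None, None]


def worker(name: str, steps: int, log: list[str]) -> Task:
    """A cooperative task: does one unit of work, then voluntarily yields."""
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # hand control back to the scheduler


def round_robin(tasks: Iterable[Task]) -> None:
    """Run tasks in turn; each next() resumes a task exactly where it left off."""
    ready = deque(tasks)
    while ready:
        task = ready.popleft()
        try:
            next(task)        # run the task until its next yield
        except StopIteration:
            continue          # task finished; drop it from the queue
        ready.append(task)    # back of the queue, Round Robin style


if __name__ == "__main__":
    log: list[str] = []
    round_robin([worker("A", 3, log), worker("B", 2, log)])
    print(log)  # ['A:0', 'B:0', 'A:1', 'B:1', 'A:2']
```

The weakness of the cooperative model is visible here: a task that never reaches a `yield` would starve every other task, which is exactly the problem preemptive multitasking solves by letting the OS interrupt tasks on a timer.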

Step 3: Understanding the role of threads

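Threads are the units that the operating system schedules, so most context switches are switches from one thread to another. A thread is the smallest sequence of programmed instructions that a scheduler can manage independently. Threads within a process share resources such as memory and open files, but each has its own execution context (registers, program counter, and stack) and its own scheduling state (running, ready, blocked, and so on). Synchronization mechanisms like mutexes and semaphores prevent conflicts when threads access shared resources. Understanding these states is key to minimizing unnecessary context switches: every time a thread blocks, for example on a contended mutex, the scheduler has to switch to something else.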
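A short sketch of threads sharing state through a mutex, using Python's standard `threading` module. All threads below see the same `Counter` object, while each has its own stack; `threading.Lock` plays the role of the mutex. The class and function names are hypothetical, chosen for illustration.

```python
import threading

# Illustrative sketch: `Counter` and `run` are hypothetical names.


class Counter:
    """Shared state: every thread in the process sees the same object."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()  # a mutex guarding `value`

    def increment(self) -> None:
        # Only one thread at a time may run the read-modify-write below.
        # A thread that finds the lock held blocks, which typically lets
        # the scheduler switch to another thread.
        with self._lock:
            self.value += 1


def run(n_threads: int = 4, per_thread: int = 10_000) -> int:
    counter = Counter()

    def work() -> None:
        for _ in range(per_thread):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(n_threads)]
    for t in threads:
        t.start()  # each thread becomes ready to run
    for t in threads:
        t.join()   # the main thread blocks until each worker finishes
    return counter.value


if __name__ == "__main__":
    print(run())  # 40000: no increments are lost thanks to the lock
```

Keeping the locked region as short as possible is the main design lever: the less time a thread holds the lock, the less often others block on it, and the fewer switches contention causes.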

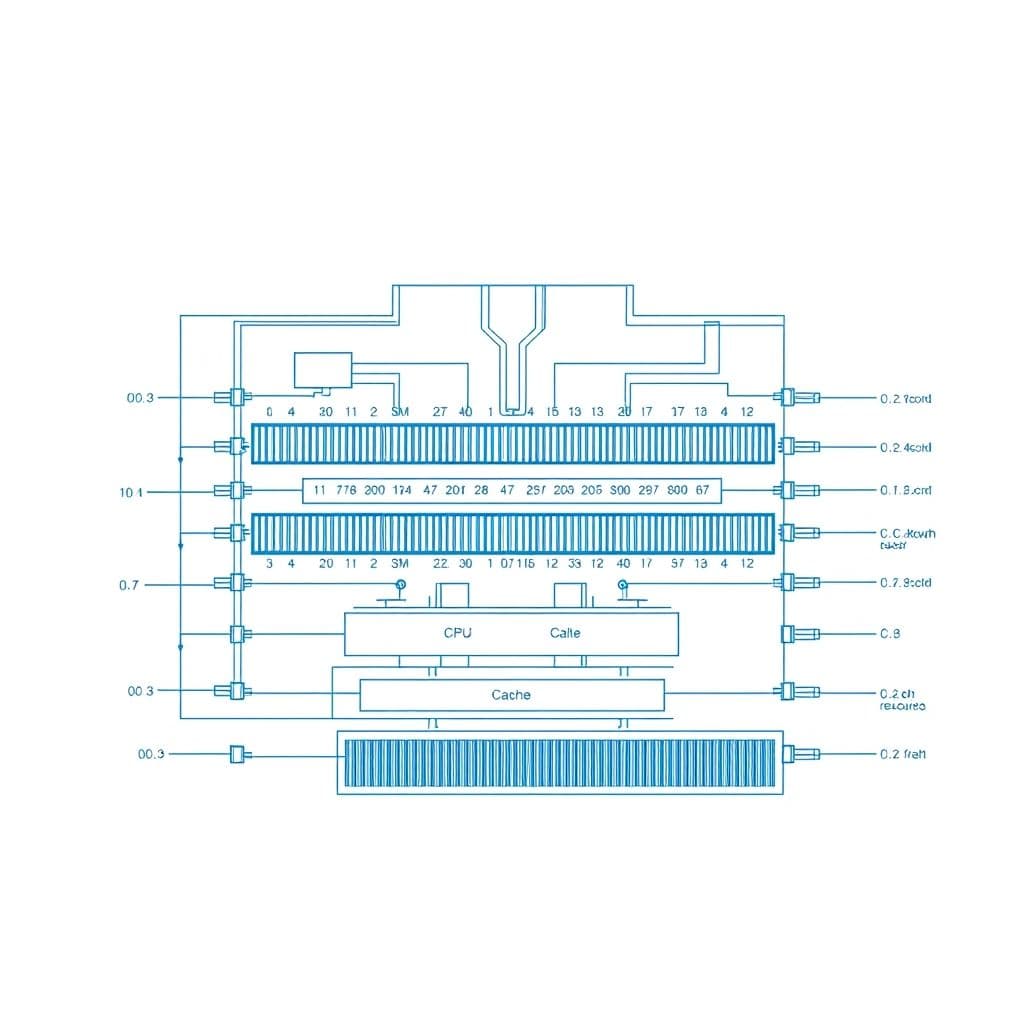

Step 4: Understanding the context switching process

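The context switching process itself involves several steps: 1) saving the state of the current task (register values, program counter, stack pointer) into its process control block (PCB), 2) updating the PCB, for instance marking the task as ready or blocked, and choosing the next task to run, and 3) loading the new task's saved state onto the CPU. Interrupts often trigger switches: a hardware timer or device signals that it needs attention, and the kernel may then schedule a different task. The overhead includes the time spent saving and loading state, plus indirect costs such as cache misses (the new task's data is not yet in cache) and, for switches between processes, changing the memory address space. Systems reduce this overhead with techniques such as thread pools, which reuse a fixed set of threads instead of creating one per task and so keep the number of threads competing for the CPU small.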
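The three steps above can be simulated with plain data structures. This is purely a teaching model under stated assumptions: the `PCB` and `CPU` classes and their fields are hypothetical, and a real kernel does this in architecture-specific code, saves far more state (floating-point and SIMD registers, for example), and may also switch page tables.

```python
from dataclasses import dataclass, field
from typing import Optional

# Teaching model only: PCB, CPU, and context_switch are hypothetical names.


@dataclass
class PCB:
    """Toy process control block: the saved context of one task."""
    pid: int
    state: str = "ready"          # "running", "ready", or "blocked"
    pc: int = 0                   # saved program counter
    registers: dict[str, int] = field(default_factory=dict)


@dataclass
class CPU:
    """Toy CPU: only the state a switch must save and restore."""
    pc: int = 0
    registers: dict[str, int] = field(default_factory=dict)
    current: Optional[PCB] = None


def context_switch(cpu: CPU, nxt: PCB, old_state: str = "ready") -> None:
    old = cpu.current
    if old is not None:
        # 1) Save the running task's state into its PCB.
        old.pc = cpu.pc
        old.registers = dict(cpu.registers)
        # 2) Update the PCB so the scheduler knows the task is ready or blocked.
        old.state = old_state
    # 3) Load the next task's saved state onto the CPU.
    cpu.pc = nxt.pc
    cpu.registers = dict(nxt.registers)
    nxt.state = "running"
    cpu.current = nxt


if __name__ == "__main__":
    cpu = CPU()
    a, b = PCB(pid=1), PCB(pid=2, pc=100, registers={"r0": 7})
    context_switch(cpu, a)                 # start task A
    cpu.pc, cpu.registers["r0"] = 5, 42    # A runs for a while
    context_switch(cpu, b)                 # save A, restore B
    print(a.pc, a.registers, cpu.pc, cpu.registers)  # 5 {'r0': 42} 100 {'r0': 7}
```

Because A's registers were copied into its PCB, switching back to A later would restore `pc=5` and `r0=42` exactly, which is the whole point of a context switch.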

Step 5: Practicing context switching

Now for the hands-on part! Write a small multithreaded program in a language like C or Python using its threading library, and let the operating system perform the switches. Measure the performance impact by comparing task completion times under different switch frequencies or thread counts. Optimization techniques include reducing contention for shared resources, grouping related work so that tasks block less often, and adjusting thread priorities. Remember: the goal isn't to eliminate switches (that's impossible), but to keep them infrequent and cheap for your specific workload.
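One way to compare switch frequencies in Python is `sys.setswitchinterval`, which sets how often CPython (in builds with the global interpreter lock) asks a running thread to let another Python thread run. This is a sketch, not a rigorous benchmark: it measures interpreter-level hand-offs, which usually involve OS thread switches but also include interpreter overhead. The names `spin` and `timed_run` are hypothetical, and the timings you see will depend on your machine.

```python
import sys
import threading
import time

# Sketch only: `spin` and `timed_run` are hypothetical helper names.


def spin(n: int) -> None:
    """CPU-bound busy work."""
    total = 0
    for i in range(n):
        total += i


def timed_run(interval: float, n_threads: int = 2, n: int = 2_000_000) -> float:
    """Time CPU-bound threads under a given interpreter switch interval (seconds)."""
    old = sys.getswitchinterval()
    sys.setswitchinterval(interval)  # smaller value = more frequent hand-offs
    try:
        threads = [threading.Thread(target=spin, args=(n,)) for _ in range(n_threads)]
        start = time.perf_counter()
        for t in threads:
            t.start()
        for t in threads:
            t.join()
        return time.perf_counter() - start
    finally:
        sys.setswitchinterval(old)  # restore the previous setting


if __name__ == "__main__":
    for interval in (1e-5, 0.005, 0.1):
        print(f"switch interval {interval:>8} s -> {timed_run(interval):.3f} s")
```

If very small intervals make the same work take longer, that difference is a rough, Python-level view of switching overhead for this workload.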

Conclusion

Mastering context switching transforms you from a passive observer to an active optimizer of system performance. You've learned the theory behind task switching, how threads enable concurrency, and practical optimization techniques. This knowledge is invaluable whether you're developing high-performance applications or simply trying to understand your computer's behavior better.

Ready to put this into practice? Try implementing a simple thread switch in your favorite programming language and share your performance observations below!

Frequently Asked Questions

- How much performance overhead does context switching typically create?

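- It varies by hardware and operating system. The direct cost of a switch on modern systems is typically on the order of microseconds, and indirect costs such as refilling caches can make the effective cost noticeably higher. While this seems small, frequent switching in performance-critical applications can add up to significant overhead.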

- Can I completely avoid context switching in my programs?

- Not in any practical system. Even single-threaded applications experience switches when handling I/O or system interrupts. The focus should be on optimization rather than elimination.

- What's the difference between process and thread context switching?

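- Process switches are heavier because they involve changing address spaces (which can also invalidate cached address translations in the TLB), while thread switches within the same process are lighter since the threads share memory. How much faster a thread switch is depends on the hardware, the OS, and how much cached data each task needs.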
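Because these costs depend so much on the machine, measuring beats quoting numbers. A rough sketch, assuming CPython: two threads repeatedly wake each other through `threading.Event`, and the average time per hand-off gives an upper bound on thread switch cost. It includes interpreter and synchronization overhead, and if the threads run on different cores it measures cross-core wake-up latency rather than a switch on one CPU. The function name `ping_pong` is hypothetical.

```python
import threading
import time

# Rough sketch: `ping_pong` is a hypothetical name; results include Python overhead.


def ping_pong(rounds: int = 10_000) -> float:
    """Estimate the average time for one thread-to-thread hand-off, in microseconds."""
    ping, pong = threading.Event(), threading.Event()

    def responder() -> None:
        for _ in range(rounds):
            ping.wait()   # block until the main thread signals
            ping.clear()
            pong.set()    # wake the main thread back up

    t = threading.Thread(target=responder)
    t.start()
    start = time.perf_counter()
    for _ in range(rounds):
        ping.set()        # wake the responder...
        pong.wait()       # ...and block until it answers
        pong.clear()
    elapsed = time.perf_counter() - start
    t.join()
    # Each round trip contains two hand-offs: main -> responder -> main.
    return elapsed / (2 * rounds) * 1e6


if __name__ == "__main__":
    print(f"~{ping_pong():.1f} µs per hand-off (upper bound, includes Python overhead)")
```

A C version using the same pattern with pthreads would strip away most of the interpreter overhead and get closer to the raw kernel cost.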